Public Cloud Architecture: A Comprehensive Guide to Building Scalable and Secure Cloud Solutions

Welcome to our blog article on public cloud architecture, where we explore the intricacies of designing and implementing scalable and secure cloud solutions. In today’s digital age, businesses are increasingly relying on cloud computing to store and access their data, run applications, and deliver services. Public cloud architecture plays a crucial role in enabling these functionalities while providing the flexibility, reliability, and cost-effectiveness that organizations require.

Understanding the Basics of Public Cloud Architecture

In this section, we delve into the fundamental concepts of public cloud architecture. We explain the key components such as virtualization, hypervisors, and the shared infrastructure model. By understanding these building blocks, you’ll gain a solid foundation for designing and optimizing your cloud environment.

The Role of Virtualization in Public Cloud Architecture

Virtualization is a foundational technology in public cloud architecture that allows for the creation of virtual instances of servers, storage, and networking resources. This enables efficient resource utilization and the ability to run multiple workloads on a single physical server. Through the use of hypervisors, which manage the virtualization process, organizations can achieve greater flexibility, scalability, and cost savings.

The Shared Infrastructure Model in Public Clouds

Public cloud providers adopt a shared infrastructure model, where multiple customers share the same physical resources, such as servers and storage systems. This approach allows for cost sharing and economies of scale, making cloud services more affordable for organizations of all sizes. However, robust security measures and isolation mechanisms are implemented to ensure that each customer’s data and applications remain protected and isolated from others.

Benefits and Challenges of Public Cloud Adoption

Here, we explore the numerous advantages public cloud architecture offers, including scalability, elasticity, and global reach. However, we also address the potential challenges and considerations to keep in mind when migrating to the public cloud. From security concerns to vendor lock-in risks, we provide insights to help you make informed decisions.

Scalability and Elasticity: Handling Fluctuating Workloads

One of the primary benefits of public cloud architecture is its ability to scale resources up or down based on demand. With scalability, organizations can easily accommodate spikes in traffic or sudden increases in workload without investing in additional hardware. Elasticity takes scalability a step further by automatically adjusting resources in real time, ensuring optimal performance and cost efficiency. By leveraging the scalability and elasticity of the public cloud, businesses can dynamically respond to changing needs and avoid costly overprovisioning.

Global Reach and Accessibility

Public cloud providers operate data centers across the globe, allowing organizations to deploy their services closer to end-users in different geographic regions. This global reach enhances performance and reduces latency, providing a seamless experience for users regardless of their location. Additionally, public cloud services are accessible over the internet, enabling employees to work remotely and access applications and data from any device with an internet connection.

Security Considerations in the Public Cloud

While public cloud providers invest heavily in security measures, organizations must also take responsibility for securing their own data and applications. It’s crucial to understand the shared responsibility model, where the cloud provider is responsible for securing the underlying infrastructure, while customers are responsible for securing their data and applications. Implementing strong access controls, encryption, and regular security audits can help mitigate risks and ensure data confidentiality and integrity.

Vendor Lock-In Risks

When adopting public cloud services, organizations should be mindful of vendor lock-in risks. Vendor lock-in occurs when an organization becomes heavily dependent on a specific cloud provider’s proprietary technologies or APIs, making it difficult to migrate to another provider or bring workloads back in-house. To mitigate the risk of vendor lock-in, it’s advisable to adopt cloud-agnostic architectures, utilize open standards, and regularly assess the feasibility of multi-cloud or hybrid cloud approaches.

Designing Robust and Secure Cloud Networks

This section focuses on designing and implementing secure cloud networks. We discuss network segmentation, virtual private clouds (VPCs), and the importance of security groups. Additionally, we explore best practices for monitoring and managing network traffic, ensuring your data remains protected in the cloud.

Network Segmentation for Enhanced Security

Network segmentation involves dividing a cloud network into smaller, isolated segments to prevent unauthorized access and limit the potential impact of security breaches. By implementing logical boundaries, organizations can enforce stricter access controls and minimize the lateral movement of threats within the network. Network segmentation can be achieved through the use of virtual LANs (VLANs), subnets, or security groups, depending on the cloud provider’s offerings.

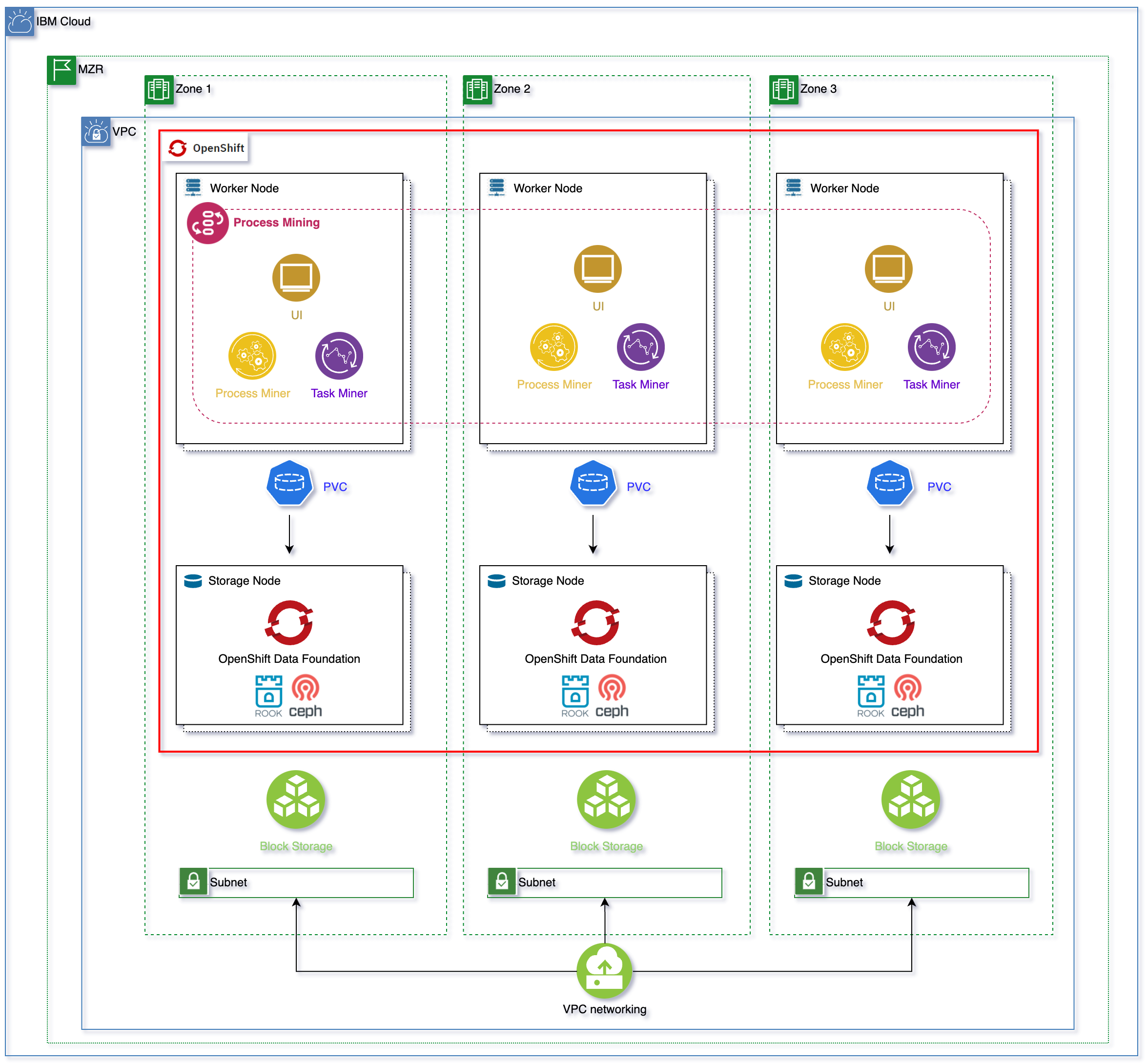

Virtual Private Clouds (VPCs): Isolating Your Cloud Environment

A Virtual Private Cloud (VPC) is a logically isolated section of a public cloud provider’s infrastructure, dedicated to a specific organization or customer. VPCs provide a higher level of isolation and control compared to a shared public cloud environment. Organizations can define their own IP address ranges, subnets, and routing tables within the VPC, ensuring that their data and resources are segregated from other customers. VPCs also allow for the creation of virtual private networks (VPNs) to establish secure connections between on-premises infrastructure and the cloud.
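
To make the idea of defining your own IP address ranges and subnets more concrete, here is a minimal sketch that splits a VPC range into equally sized subnets using Python's standard `ipaddress` module. The `plan_subnets` helper and the `10.0.0.0/16` range are illustrative assumptions, not part of any provider's API.

```python
import ipaddress
from itertools import islice

def plan_subnets(vpc_cidr, new_prefix, count):
    """Return the first `count` subnets of size /new_prefix inside the VPC range."""
    network = ipaddress.ip_network(vpc_cidr)
    subnets = [str(s) for s in islice(network.subnets(new_prefix=new_prefix), count)]
    if len(subnets) < count:
        raise ValueError("VPC range is too small for the requested subnets")
    return subnets

# Hypothetical layout: three /24 subnets carved out of a /16 VPC range.
print(plan_subnets("10.0.0.0/16", new_prefix=24, count=3))
# ['10.0.0.0/24', '10.0.1.0/24', '10.0.2.0/24']
```

In practice you might reserve one subnet per availability zone and tier, but that layout depends on your provider and workload.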

Security Groups: Fine-Grained Access Controls

Security groups are a fundamental component of cloud network security. They act as virtual firewalls, controlling inbound and outbound traffic to instances within a VPC or subnet. By defining security group rules, organizations can specify which protocols, ports, and IP ranges are allowed or denied access to their resources. This granular control ensures that only authorized entities can communicate with the cloud instances, reducing the attack surface and enhancing overall security.
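
The rule matching described above can be sketched in a few lines. This example assumes an allow-list model with an implicit default deny, which is how several providers implement security groups; the `Rule` type and `is_allowed` function are hypothetical names used only for illustration.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    protocol: str   # e.g. "tcp"
    port_from: int  # first port in the allowed range
    port_to: int    # last port in the allowed range
    cidr: str       # source range allowed to connect

def is_allowed(rules, protocol, port, source_ip):
    """Allow traffic only if at least one rule matches; otherwise deny."""
    addr = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (rule.protocol == protocol
                and rule.port_from <= port <= rule.port_to
                and addr in ipaddress.ip_network(rule.cidr)):
            return True
    return False  # implicit default deny

# Hypothetical inbound rules: HTTPS from anywhere, SSH from one office range.
inbound = [
    Rule("tcp", 443, 443, "0.0.0.0/0"),
    Rule("tcp", 22, 22, "203.0.113.0/24"),
]
print(is_allowed(inbound, "tcp", 22, "203.0.113.7"))   # True
print(is_allowed(inbound, "tcp", 22, "198.51.100.1"))  # False
```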

Monitoring and Managing Network Traffic

Effective monitoring and management of network traffic are essential to detect and mitigate potential security threats or performance issues. Cloud providers offer various tools and services to monitor network traffic, such as flow logs, packet capture, and network performance monitoring. By leveraging these capabilities, organizations can gain visibility into their network traffic, identify anomalies, and take proactive measures to address any issues that arise.

Scalability and Elasticity in the Public Cloud

In this section, we delve into the concepts of scalability and elasticity and how they are achieved in public cloud architecture. We cover auto-scaling, load balancing, and horizontal and vertical scaling techniques. By optimizing your cloud infrastructure for scalability, you can handle varying workloads efficiently and cost-effectively.

Auto-Scaling: Handling Dynamic Workloads

Auto-scaling is a critical feature in public cloud environments that allows resources to automatically scale up or down based on predefined conditions or policies. By setting thresholds for CPU utilization, network traffic, or other metrics, organizations can ensure that additional resources are provisioned when needed and deprovisioned when idle. Auto-scaling enables efficient resource utilization, cost savings, and the ability to handle sudden spikes in demand without manual intervention.
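
As a rough illustration of a threshold-based policy, the sketch below decides how many instances a group should run based on average CPU utilization. The thresholds, the group limits, and the `desired_capacity` name are assumptions chosen for the example; real auto-scaling services offer richer policies such as target tracking and cooldown periods.

```python
def desired_capacity(current, cpu_percent, *, scale_out_at=70.0,
                     scale_in_at=30.0, min_size=1, max_size=10):
    """Return the instance count a simple step policy would ask for."""
    if cpu_percent > scale_out_at:
        target = current + 1      # provision one more instance under load
    elif cpu_percent < scale_in_at:
        target = current - 1      # remove one instance when mostly idle
    else:
        target = current          # inside the comfortable band: no change
    # Never leave the configured bounds of the group.
    return max(min_size, min(max_size, target))

print(desired_capacity(2, 85.0))  # 3
print(desired_capacity(2, 10.0))  # 1
print(desired_capacity(1, 10.0))  # 1 (already at the minimum size)
```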

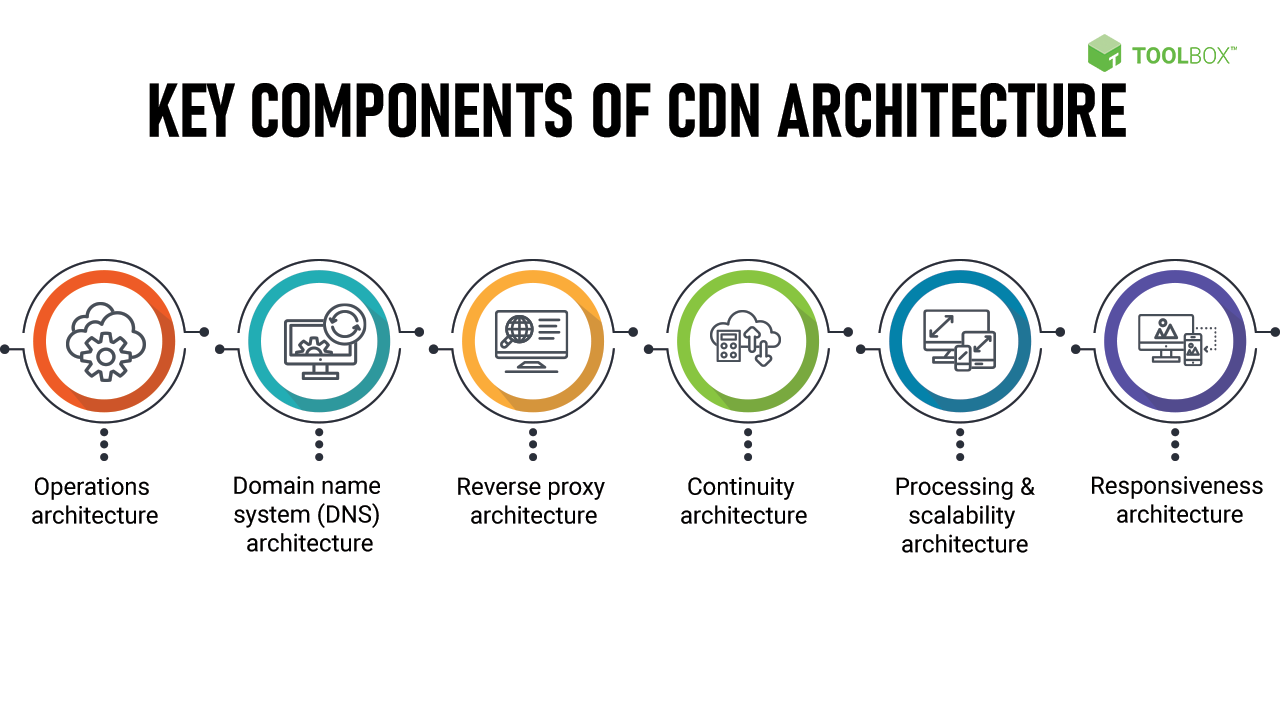

Load Balancing: Distributing Workloads

Load balancing is a technique used to distribute incoming network traffic across multiple servers or instances to prevent any single resource from becoming overloaded. In the public cloud, load balancers can be deployed at various levels, including the application layer, network layer, or even at the DNS level. Load balancing improves performance, enhances fault tolerance, and ensures that resources are utilized evenly, maximizing the scalability and availability of your cloud infrastructure.
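
One of the simplest distribution strategies is round robin, where each new request goes to the next backend in turn. The sketch below shows only that selection logic; the `RoundRobinBalancer` class and the backend names are hypothetical, and managed load balancers add health checks, weighting, and connection handling on top.

```python
from itertools import cycle

class RoundRobinBalancer:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(backends)

    def next_backend(self):
        return next(self._rotation)

balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([balancer.next_backend() for _ in range(4)])
# ['app-1', 'app-2', 'app-3', 'app-1']
```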

Horizontal Scaling: Adding More Instances

Horizontal scaling, also known as scaling out, involves adding more instances or servers to accommodate increased workload demands. By distributing the workload across multiple instances, organizations can achieve better performance and handle higher traffic volumes. Horizontal scaling is particularly effective for stateless applications or those designed with a microservices architecture, where each instance can handle a specific task or function independently.

Vertical Scaling: Upgrading Instance Sizes

Vertical scaling, also known as scaling up, involves increasing the resources of an individual instance, such as CPU, memory, or storage capacity, to handle increased workload demands. Vertical scaling is suitable for applications that require more computational power or memory to deliver optimal performance. However, it’s important to consider the limitations of vertical scaling, as there may be practical constraints on how much an instance can be upgraded.
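
The practical ceiling on vertical scaling can be illustrated with a small lookup: pick the smallest instance size that satisfies a workload, and recognize when nothing in the catalog is big enough. The size names and capacities below are invented for the example and do not correspond to any provider's instance types.

```python
from typing import Optional

# Hypothetical catalog, ordered from smallest to largest: (name, vCPUs, memory in GiB).
INSTANCE_SIZES = [
    ("small", 2, 4),
    ("medium", 4, 16),
    ("large", 8, 32),
    ("xlarge", 16, 64),
]

def smallest_fit(vcpus_needed: int, memory_gib_needed: int) -> Optional[str]:
    """Return the smallest size that meets both requirements, or None."""
    for name, vcpus, memory_gib in INSTANCE_SIZES:
        if vcpus >= vcpus_needed and memory_gib >= memory_gib_needed:
            return name
    return None  # beyond the largest size: consider scaling out instead

print(smallest_fit(3, 8))    # medium
print(smallest_fit(32, 64))  # None
```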

Data Storage and Management in the Cloud

Here, we explore different storage options available in the public cloud, such as object storage, block storage, and file storage. We discuss data redundancy, durability, and availability considerations. Additionally, we provide insights into choosing the right storage solutions for your specific workload requirements.

Object Storage: Scalable and Flexible

Object storage is a popular storage option in the public cloud, designed to store vast amounts of unstructured data, such as images, videos, and documents. It provides a scalable and flexible approach, where data is stored as objects with unique identifiers. Object storage offers high durability, as data is typically replicated across multiple geographically diverse locations. It also provides granular access controls and compatibility with various programming languages and platforms, making it an ideal choice for applications that require cost-effective, highly available, and scalable storage solutions.

Block Storage: Performance and Persistence

Block storage is a storage option that provides low-latency, high-performance access to data. It operates at the block level and is commonly used for databases, virtual machine disks, and high-performance applications that require direct access to storage. Block storage offers features such as data persistence, allowing data to remain intact even if an instance is terminated. It also supports features like snapshots and cloning, making it easier to manage and replicate data. However, block storage is tied to a specific instance and may require additional configuration to ensure high availability and data redundancy.

File Storage: Shared Access and Compatibility

File storage provides a shared file system accessible by multiple instances, making it suitable for applications that require shared access to files or a traditional file system structure. It offers compatibility with existing applications and protocols, such as Network File System (NFS) or Server Message Block (SMB). File storage is commonly used for file sharing, content management systems, and applications that require a hierarchical file structure. However, it’s important to consider performance limitations and scalability constraints when designing your file storage architecture.

Data Redundancy and Durability

Ensuring data redundancy and durability is essential when storing data in the public cloud. Public cloud providers typically offer mechanisms, such as data replication and redundancy across multiple data centers, to protect against hardware failures or disasters. By leveraging these features, organizations can achieve high data availability and durability, minimizing the risk of data loss and ensuring business continuity.

Choosing the Right Storage Solutions

When selecting storage solutions for your specific workload requirements, it’s important to consider factors such as performance, scalability, cost, and data access patterns. Understanding the characteristics of different storage options, as well as the specific needs of your applications and data, will help you make informed decisions and optimize your cloud storage architecture.

High Availability and Disaster Recovery Strategies

In this section, we delve into designing high availability and disaster recovery solutions in the public cloud. We discuss fault tolerance, redundancy, and backup strategies. Whether you’re aiming for zero downtime or ensuring business continuity in the face of disasters, we provide valuable guidance to achieve your goals.

Fault Tolerance and Redundancy

Fault tolerance and redundancy are crucial components of a high availability architecture. By designing your cloud infrastructure with a focus on fault tolerance, you can ensure that your applications and services remain operational even if individual components or instances fail. This can be achieved through techniques such as deploying redundant instances across different availability zones, implementing load balancing, and using automated monitoring and recovery mechanisms.
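
A quick calculation shows why redundant instances help. Assuming each instance is available with the same probability and that failures are independent (a simplification, since real failures are often correlated), the service is down only when every replica is down at once. The `combined_availability` helper is a hypothetical name for this arithmetic.

```python
def combined_availability(single, replicas):
    """Availability of a service that needs at least one of `replicas` to be up.

    Assumes identical, independent instances.
    """
    if not 0.0 <= single <= 1.0 or replicas < 1:
        raise ValueError("single must be in [0, 1] and replicas >= 1")
    return 1 - (1 - single) ** replicas

for n in (1, 2, 3):
    print(n, f"{combined_availability(0.99, n):.6f}")
# 1 0.990000
# 2 0.999900
# 3 0.999999
```

Spreading replicas across availability zones is what makes the independence assumption more plausible.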

Backup and Restore Strategies

Implementing robust backup and restore strategies is essential for disaster recovery. Public cloud providers offer various backup options, including regular snapshots, incremental backups, and off-site replication. By establishing a backup schedule and defining recovery point objectives (RPOs) and recovery time objectives (RTOs), you can ensure that your data is protected and can be restored quickly in the event of a disaster or data loss.
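
To see how a backup schedule relates to an RPO, the sketch below computes how much recent data would be lost if a failure struck at a given moment: everything written since the last completed backup. The timestamps, the four-hour RPO, and the `data_loss_window` helper are assumptions made up for the example.

```python
from datetime import datetime, timedelta

def data_loss_window(backup_times, failure_time):
    """Time between the latest backup before the failure and the failure itself."""
    earlier = [t for t in backup_times if t <= failure_time]
    if not earlier:
        raise ValueError("no backup exists before the failure")
    return failure_time - max(earlier)

# Hypothetical schedule: a backup every six hours.
backups = [datetime(2024, 1, 1, h) for h in (0, 6, 12)]
failure = datetime(2024, 1, 1, 14, 30)
rpo = timedelta(hours=4)

loss = data_loss_window(backups, failure)
print(loss, loss <= rpo)  # 2:30:00 True
```

With a six-hour interval the worst case is just under six hours of lost data, so this schedule would not reliably meet a four-hour RPO even though this particular failure falls within it.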

Testing and Validating Disaster Recovery Plans

Having a disaster recovery plan is not enough; regular testing and validation are crucial to ensure its efficacy. By simulating potential disaster scenarios and executing recovery procedures, you can identify any weaknesses or gaps in your plan and make necessary adjustments. Regular testing also helps train your teams and familiarize them with the recovery process, enabling a swift and efficient response in case of a real disaster.

Geographic Redundancy and Multi-Region Deployments

To enhance the resilience of your cloud infrastructure, consider deploying your resources across multiple geographic regions offered by your cloud provider. By leveraging multi-region deployments, you can ensure that your applications and data are replicated and available in different locations, reducing the risk of service interruptions due to regional outages or disasters. However, it’s important to balance the benefits of geographic redundancy with the associated costs and complexities.

Security and Compliance in the Public Cloud

This section focuses on the critical aspect of security and compliance in public cloud architecture. We explore various security controls, encryption techniques, and identity and access management (IAM) solutions. Moreover, we address compliance requirements and offer tips for ensuring your cloud environment meets industry standards.

Implementing Strong Access Controls

Access controls play a vital role in securing your cloud environment. By implementing strong authentication mechanisms, such as multi-factor authentication (MFA) and strong passwords, you can prevent unauthorized access to your resources. Additionally, implementing fine-grained access controls through IAM solutions allows you to define and enforce permissions at a granular level, ensuring that only authorized individuals or entities can access and modify your data and applications.
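
Fine-grained permissions are often expressed as policy statements that are evaluated for each request. The sketch below assumes a common evaluation order (an explicit deny wins, an explicit allow is required, and everything else is denied); the statement format, action names, and `is_authorized` function are hypothetical and simpler than any real IAM policy language.

```python
from fnmatch import fnmatchcase

def is_authorized(statements, action, resource):
    """Evaluate statements: explicit deny overrides allow; default is deny."""
    allowed = False
    for s in statements:
        if fnmatchcase(action, s["action"]) and fnmatchcase(resource, s["resource"]):
            if s["effect"] == "deny":
                return False
            allowed = True
    return allowed

# Hypothetical policy: full access to report objects, but never deletion.
policy = [
    {"effect": "allow", "action": "storage:*", "resource": "bucket/reports/*"},
    {"effect": "deny", "action": "storage:Delete", "resource": "*"},
]
print(is_authorized(policy, "storage:Read", "bucket/reports/q1.csv"))    # True
print(is_authorized(policy, "storage:Delete", "bucket/reports/q1.csv"))  # False
print(is_authorized(policy, "compute:Start", "vm/web-1"))                # False
```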

Data Encryption in Transit and at Rest

Encrypting data in transit and at rest is essential to protect sensitive information from unauthorized access. Public cloud providers offer encryption capabilities that let you encrypt data before it leaves your premises and keep it encrypted while it is stored in the cloud. By leveraging encryption technologies and best practices, such as using strong encryption algorithms and managing encryption keys securely, you can ensure the confidentiality and integrity of your data.

Security Monitoring and Incident Response

Continuous security monitoring and incident response capabilities are crucial for detecting and responding to security threats in real time. Public cloud providers offer various security monitoring tools and services, such as log management, threat detection, and intrusion detection systems (IDS). By leveraging these capabilities and implementing robust incident response procedures, organizations can quickly identify and mitigate security incidents, minimizing the impact on their cloud environment.

Compliance Considerations

Compliance with industry regulations and standards is a critical requirement for many organizations. Public cloud providers maintain certifications, attestations, and compliance programs that support frameworks and regulations such as PCI DSS, HIPAA, and GDPR. Because compliance follows the same shared responsibility model as security, choosing a provider that aligns with your requirements is only part of the work; you also need to implement appropriate security controls in your own workloads so that your cloud environment meets the necessary standards.

Optimizing Performance and Cost Efficiency

Here, we discuss strategies to optimize performance and cost efficiency in the public cloud. We cover topics such as resource utilization, workload balancing, and monitoring. By implementing these optimization techniques, you can enhance user experience while keeping your cloud costs under control.

Optimizing Resource Utilization

Efficient resource utilization is key to achieving cost savings and maximizing performance in the public cloud. By analyzing resource usage patterns, organizations can identify underutilized or overprovisioned resources and make necessary adjustments. Techniques such as rightsizing instances, implementing auto-scaling, and utilizing serverless computing can help optimize resource allocation and minimize waste.
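
Identifying rightsizing candidates can start with something as simple as averaging utilization samples per instance. The sketch below flags instances whose mean CPU stays under a threshold; the 20% cutoff, the sample data, and the `rightsizing_candidates` name are assumptions for illustration, and a real analysis would also look at memory, I/O, and peak usage.

```python
from statistics import mean

def rightsizing_candidates(cpu_samples, threshold=20.0):
    """Return instance names whose average CPU utilization is below `threshold`."""
    return sorted(
        name for name, samples in cpu_samples.items()
        if samples and mean(samples) < threshold
    )

# Hypothetical utilization samples (percent CPU) collected over a period.
usage = {
    "web-1": [12.0, 8.0, 10.0],
    "db-1": [65.0, 70.0, 75.0],
    "batch-1": [5.0, 15.0],
}
print(rightsizing_candidates(usage))  # ['batch-1', 'web-1']
```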

Workload Balancing and Performance Optimization

Load balancing techniques, such as distributing workloads across multiple instances or leveraging content delivery networks (CDNs), can help optimize performance and improve user experience. By evenly distributing traffic and offloading content delivery to edge locations, organizations can reduce latency, improve response times, and handle higher traffic volumes more efficiently.

Monitoring and Performance Optimization

Proactive monitoring and performance optimization are crucial to ensure optimal performance and cost efficiency in the public cloud. By leveraging monitoring tools and services provided by your cloud provider, organizations can gain insights into resource utilization, application performance, and cost patterns. This data can be used to identify bottlenecks, optimize configurations, and make informed decisions to improve performance and control costs.

Cost Optimization Strategies

Controlling costs in the public cloud is an ongoing process. By leveraging cost optimization strategies, such as utilizing spot instances, optimizing storage costs, and implementing cost allocation tags, organizations can achieve significant cost savings. It’s important to regularly analyze cost reports, monitor spend, and adjust resource allocation and usage to align with business needs and budget constraints.
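
Cost allocation tags become useful once spend is grouped by them. The following sketch totals billing line items per tag value and collects anything without the tag under `untagged`; the line-item structure and the `cost_by_tag` helper are hypothetical and not the export format of any particular provider.

```python
from collections import defaultdict

def cost_by_tag(line_items, tag_key):
    """Sum costs per value of `tag_key`; items missing the tag go to 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)

# Hypothetical billing line items.
items = [
    {"cost": 10.0, "tags": {"team": "web"}},
    {"cost": 5.5, "tags": {"team": "data"}},
    {"cost": 2.5, "tags": {"team": "web"}},
    {"cost": 1.0, "tags": {}},
]
print(cost_by_tag(items, "team"))
# {'web': 12.5, 'data': 5.5, 'untagged': 1.0}
```

A growing `untagged` total is often the first sign that tagging policies are not being enforced.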

DevOps and Automation in Public Cloud Environments

This section explores the integration of DevOps practices and automation in public cloud architecture. We discuss the benefits of continuous integration and continuous deployment (CI/CD), infrastructure as code (IaC), and orchestration tools. By embracing automation, you can streamline your cloud operations and accelerate software delivery.

Continuous Integration and Continuous Deployment (CI/CD)

Continuous Integration and Continuous Deployment (CI/CD) practices enable organizations to automate the software development and release processes. By implementing CI/CD pipelines, developers can integrate code changes frequently, automatically build and test applications, and deploy them to production environments. This approach improves agility, reduces manual errors, and accelerates time-to-market for new features and updates.
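
At its core, a pipeline runs a sequence of stages and stops at the first failure so that broken code never reaches deployment. The sketch below models that control flow only; the stage names and the `run_pipeline` function are hypothetical, and real CI/CD systems add triggers, artifacts, and parallelism.

```python
def run_pipeline(stages):
    """Run stages in order; stop at the first one that fails.

    Each stage is a (name, callable) pair where the callable returns True on success.
    Returns the list of completed stage names and the name of the failed stage (or None).
    """
    completed = []
    for name, step in stages:
        if not step():
            return completed, name
        completed.append(name)
    return completed, None

# Hypothetical run in which the test stage fails, so deploy never executes.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))  # (['build'], 'test')
```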

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is a methodology that enables the provisioning and management of infrastructure resources through machine-readable configuration files. By defining infrastructure configurations as code, organizations can version control and automate the provisioning and management of resources. IaC tools, such as Terraform or AWS CloudFormation, allow for consistent and repeatable deployments, reducing manual effort and ensuring infrastructure consistency across environments.
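
The declarative idea behind IaC can be sketched as a comparison between the desired state in configuration and the actual state in the cloud, producing a plan of changes. This is a simplified illustration and not how any specific tool is implemented; the resource names and the `plan` function are hypothetical.

```python
def plan(desired, actual):
    """Compare desired and actual resources and list the changes needed."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(
            name for name in desired.keys() & actual.keys()
            if desired[name] != actual[name]
        ),
        "delete": sorted(actual.keys() - desired.keys()),
    }

# Hypothetical states: resource name -> configuration.
desired = {"web": {"size": "medium"}, "db": {"size": "large"}}
actual = {"web": {"size": "small"}, "cache": {"size": "small"}}
print(plan(desired, actual))
# {'create': ['db'], 'update': ['web'], 'delete': ['cache']}
```

Running the same plan twice against an unchanged environment yields no changes, which is what makes deployments repeatable.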

Orchestration Tools for Cloud Management

Cloud orchestration tools provide automation and management capabilities for cloud resources, allowing organizations to define, deploy, and manage complex cloud architectures. These tools enable the automation of resource provisioning, configuration management, and application deployment workflows. By leveraging orchestration tools, organizations can achieve consistent deployments, improved scalability, and simplified management of their cloud environments.

Monitoring and Automation for Operations

Monitoring and automation tools play a crucial role in managing and operating cloud environments. By implementing monitoring solutions that provide real-time insights into the health and performance of your infrastructure, you can proactively identify issues and automate remediation actions. Automation scripts, triggered by monitoring alerts or events, can help automate routine operational tasks, such as scaling resources, restarting instances, or performing system backups. This reduces manual effort, improves operational efficiency, and allows your teams to focus on more strategic tasks.
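
A small sketch of alert-driven automation: each alert type maps to a remediation action, and anything unrecognized is escalated to a person. The alert fields, the runbook entries, and the handler names are all assumptions for illustration, and the actions only return a description of what they would do rather than calling a cloud API.

```python
def restart_instance(alert):
    return f"restart {alert['resource']}"

def add_capacity(alert):
    return f"scale out {alert['resource']} by 1"

# Hypothetical runbook: alert type -> automated remediation.
RUNBOOK = {
    "instance_unhealthy": restart_instance,
    "high_cpu": add_capacity,
}

def handle_alert(alert):
    """Run the matching remediation, or escalate when no automation exists."""
    action = RUNBOOK.get(alert["type"])
    if action is None:
        return f"escalate {alert['type']} to on-call"
    return action(alert)

print(handle_alert({"type": "high_cpu", "resource": "web-group"}))
# scale out web-group by 1
print(handle_alert({"type": "disk_full", "resource": "db-1"}))
# escalate disk_full to on-call
```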

Future Trends and Innovations in Public Cloud Architecture

In this final section, we take a glimpse into the future of public cloud architecture. We discuss emerging trends, such as serverless computing, edge computing, and hybrid cloud models. By staying informed about the latest advancements, you can prepare your organization for the next wave of cloud computing technologies.

Serverless Computing: Focusing on Code, Not Infrastructure

Serverless computing, most commonly delivered as Function as a Service (FaaS), is a paradigm shift in cloud computing where developers can focus solely on writing and deploying code, without the need to manage underlying infrastructure. In serverless architectures, applications are divided into small, event-driven functions that are executed in response to specific triggers. This approach offers scalability, cost efficiency, and simplified development, as resources are provisioned and billed based on actual usage.
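
An event-driven function is typically a small, stateless piece of code that receives an event and returns a result. The sketch below imagines a function triggered when a file is uploaded to object storage; the event fields and the `handle_upload` name are hypothetical, and each FaaS platform defines its own handler signature and event format.

```python
import json

def handle_upload(event):
    """React to a hypothetical 'object uploaded' event and return a JSON summary."""
    name = event["object_name"]
    size_kb = round(event["size_bytes"] / 1024, 1)
    return json.dumps({"object": name, "size_kb": size_kb})

# Locally, the trigger can be simulated by calling the function with a sample event.
print(handle_upload({"object_name": "photo.jpg", "size_bytes": 2048}))
# {"object": "photo.jpg", "size_kb": 2.0}
```

Because the function keeps no state between calls, the platform is free to run as many copies as incoming events require.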

Edge Computing: Moving Processing Closer to the Source

Edge computing brings computational power and data storage closer to the source of data generation, reducing latency and improving response times. By leveraging edge computing, organizations can process and analyze data closer to where it is collected, enabling real-time insights and faster decision-making. This is particularly valuable in applications that require low-latency interactions, such as IoT devices, autonomous vehicles, and real-time analytics.

Hybrid Cloud Models: Bridging On-Premises and Public Cloud

Hybrid cloud models combine the benefits of public cloud services with on-premises infrastructure, allowing organizations to leverage the best of both worlds. By adopting a hybrid approach, organizations can maintain control over sensitive data and critical workloads while taking advantage of the scalability and flexibility offered by the public cloud. Hybrid cloud architectures enable seamless workload migration, workload portability, and the ability to optimize cost and performance based on specific requirements.

Advancements in Machine Learning and AI

Machine learning and artificial intelligence (AI) are transforming the way organizations leverage data in the cloud. Public cloud providers offer a wide range of AI and machine learning services, such as image and speech recognition, natural language processing, and predictive analytics. These services enable organizations to extract valuable insights from large datasets, automate processes, and enhance decision-making capabilities.

Blockchain and Distributed Ledger Technologies

Blockchain and distributed ledger technologies are gaining traction in the public cloud space, enabling secure and transparent data transactions and verifications. Public cloud providers are offering blockchain-as-a-service (BaaS) solutions, simplifying the deployment and management of blockchain networks. These technologies have the potential to revolutionize industries by enabling secure and tamper-proof digital identities, supply chain management, and decentralized applications.

In conclusion, this comprehensive guide has provided you with a deep understanding of public cloud architecture. From the basics to advanced strategies, you are equipped with the knowledge to build scalable, secure, and cost-effective cloud solutions. By embracing the power of the public cloud, your organization can unlock new possibilities and drive innovation in the digital era. As the cloud computing landscape continues to evolve, staying informed about emerging trends and innovations will be crucial for staying ahead of the curve and maximizing the benefits of the public cloud.