Understanding Cloud Server Architecture Diagrams: A Comprehensive Guide

Welcome to our comprehensive guide to cloud server architecture diagrams. In this article, we will delve into the intricacies of cloud server architecture, exploring its main components and how they work together to provide a reliable and scalable infrastructure for businesses. Whether you are a beginner looking to understand the basics or an experienced professional seeking to deepen your knowledge, this guide has you covered.

What is Cloud Server Architecture?

In the ever-evolving world of technology, cloud server architecture has emerged as a vital element for businesses seeking a flexible and scalable IT infrastructure. It refers to the design and structure of the servers and systems that enable cloud computing services. A cloud server architecture combines components such as virtual machines, storage systems, networking components, and load balancers, which work together to deliver computing resources on demand.

The Advantages of Cloud Server Architecture

Cloud server architecture offers several advantages over traditional physical servers. First, it provides scalability: businesses can scale resources up or down as demand changes, which improves resource allocation and cost-efficiency. Second, it supports high availability, because data and workloads can be replicated across multiple servers and locations, reducing the risk of downtime. Third, cloud platforms provide security controls, such as encryption and access management, that help protect sensitive data. Finally, cloud server architecture simplifies management and maintenance, since tasks such as software updates and infrastructure monitoring can be automated.

The Importance of Cloud Server Architecture Diagrams

Cloud server architecture diagrams play a crucial role in understanding and visualizing complex systems. These visual representations help in designing, implementing, and troubleshooting cloud server architectures effectively. By visually mapping out the components and their relationships, stakeholders can gain a better understanding of the overall system and its dependencies. This aids in identifying potential bottlenecks, optimizing performance, and ensuring the security and reliability of the cloud infrastructure.

Designing an Effective Cloud Server Architecture Diagram

When creating a cloud server architecture diagram, it is essential to consider the intended audience and the level of detail required. A well-designed diagram should clearly depict the various components, their connections, and their interactions within the architecture. Utilizing standard symbols and notations, such as boxes for servers and lines for network connections, ensures consistency and ease of understanding. Additionally, labeling each component and providing relevant descriptions can enhance comprehension, especially for those unfamiliar with the specific architecture.

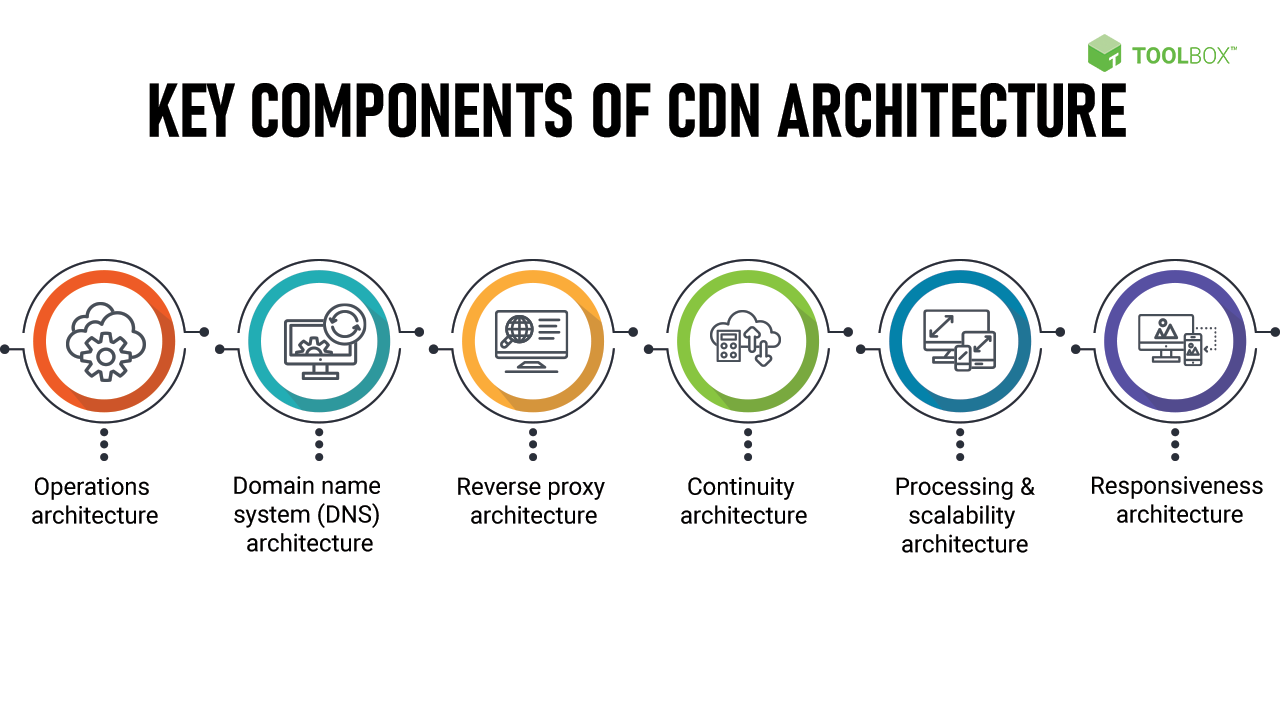
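Diagrams are easier to keep accurate when they are generated from a description of the system rather than drawn by hand. The sketch below assumes Graphviz's DOT format as the output. The `ArchitectureDiagram` class and the component names are hypothetical. Turning the text into an image would also require the Graphviz `dot` tool.

```python
from dataclasses import dataclass, field


@dataclass
class ArchitectureDiagram:
    """Components (id -> label) plus directed, labeled connections."""

    name: str
    components: dict = field(default_factory=dict)
    connections: list = field(default_factory=list)

    def add(self, component_id: str, label: str) -> None:
        self.components[component_id] = label

    def connect(self, src: str, dst: str, label: str = "") -> None:
        # Reject connections to undeclared components so the diagram stays consistent.
        for node in (src, dst):
            if node not in self.components:
                raise KeyError(f"unknown component: {node}")
        self.connections.append((src, dst, label))

    def to_dot(self) -> str:
        """Render as Graphviz DOT text: boxes for components, arrows for connections."""
        lines = [f'digraph "{self.name}" {{', "  node [shape=box];"]
        for cid, label in self.components.items():
            lines.append(f'  {cid} [label="{label}"];')
        for src, dst, label in self.connections:
            lines.append(f'  {src} -> {dst} [label="{label}"];')
        lines.append("}")
        return "\n".join(lines)


# Hypothetical two-VM web application behind a load balancer.
diagram = ArchitectureDiagram("web-app")
diagram.add("lb", "Load Balancer")
diagram.add("vm1", "VM 1")
diagram.add("vm2", "VM 2")
diagram.add("db", "Database (block storage)")
diagram.connect("lb", "vm1", "HTTPS")
diagram.connect("lb", "vm2", "HTTPS")
diagram.connect("vm1", "db", "SQL")
diagram.connect("vm2", "db", "SQL")
print(diagram.to_dot())
```

Because every connection must reference a declared component, a typo in a component name fails immediately instead of producing a misleading picture.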

Key Components of Cloud Server Architecture

Understanding the key components of cloud server architecture is crucial for designing and managing a robust and scalable infrastructure. Let’s explore the main components:

1. Virtual Machines (VMs)

Virtual machines are the building blocks of cloud server architecture. A VM is a software emulation of a physical server, and many VMs can run on a single physical machine. VMs provide isolation, allowing different applications and operating systems to run independently while sharing the underlying hardware resources. This flexibility lets businesses allocate resources efficiently and scale their computing capacity easily.

2. Hypervisors

Hypervisors, also known as virtual machine monitors, are software or firmware that create and manage virtual machines. They abstract the underlying hardware and allocate CPU, memory, and I/O to each VM, ensuring efficient utilization of the physical server. Many hypervisors also support live migration, which moves a running VM between physical servers with little or no noticeable downtime.

3. Load Balancers

Load balancers distribute incoming network traffic across multiple servers to ensure optimal resource utilization and prevent overloading. They act as intermediaries between clients and servers, routing requests to the most suitable server based on various factors, such as server health, capacity, and proximity. Load balancers enhance reliability and scalability by evenly distributing the workload and redirecting traffic in case of server failures.
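Round-robin is one of the simplest distribution policies. Production load balancers typically offer others as well, such as least-connections or weighted routing. The following sketch uses a hypothetical `RoundRobinBalancer` class and hypothetical backend names. It shows the two behaviors described above: rotating requests across servers and skipping servers marked unhealthy.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Rotates through backends in order, skipping any marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        # Try each backend at most once per request before giving up.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["vm-a", "vm-b", "vm-c"])
print([lb.pick() for _ in range(3)])  # ['vm-a', 'vm-b', 'vm-c']
lb.mark_down("vm-b")                  # e.g. after failed health checks
print([lb.pick() for _ in range(4)])  # ['vm-a', 'vm-c', 'vm-a', 'vm-c']
```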

4. Storage Systems

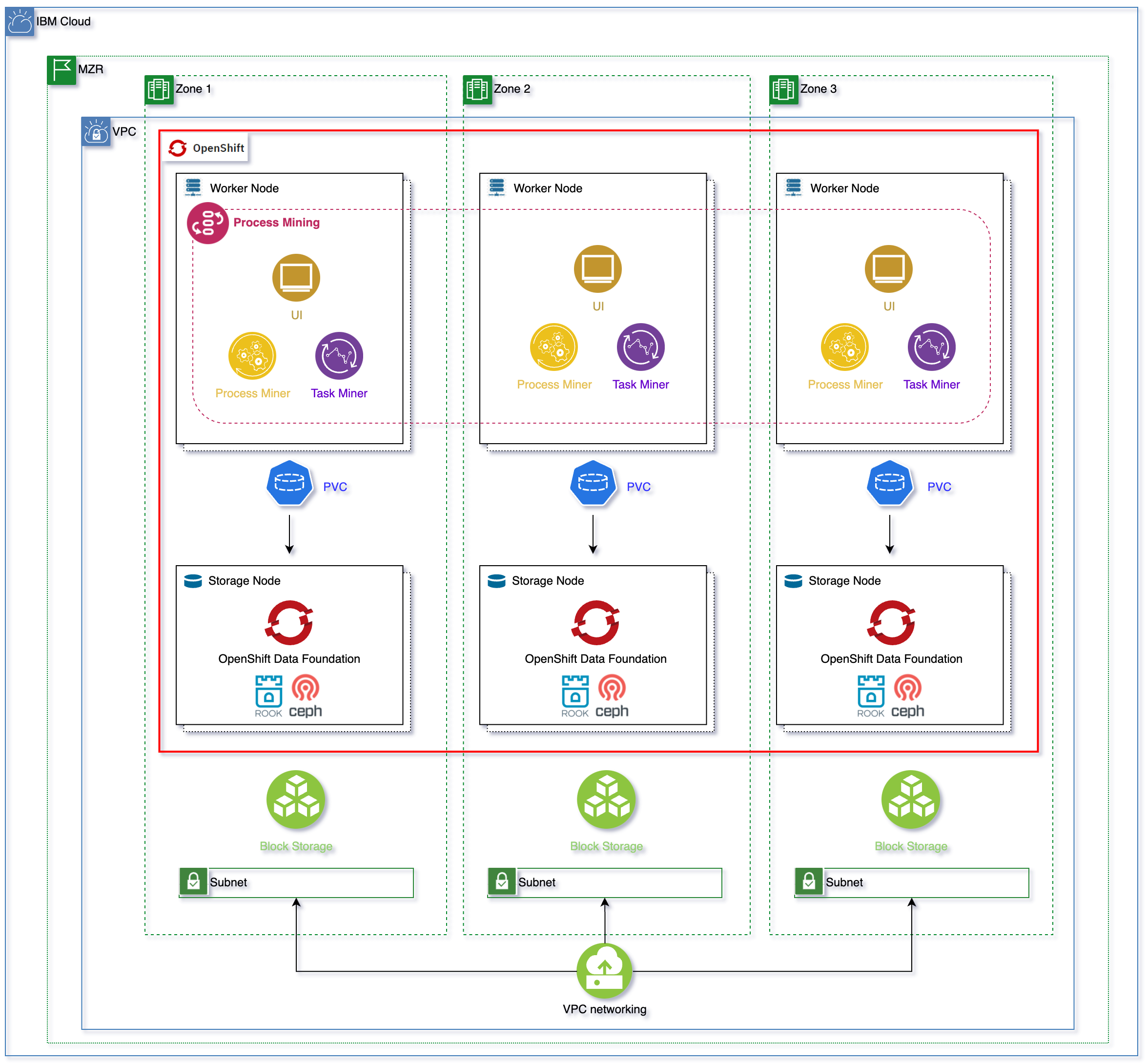

Storage systems in cloud server architecture store and retrieve data. Two of the most common types are block storage and object storage. Block storage provides volumes that can be attached to virtual machines, which read and write them like a physical hard drive. Object storage, by contrast, stores data as whole objects, each with a key and metadata, spreads them across many servers, and is accessed via APIs. It is commonly used for large amounts of unstructured data, such as images, videos, and documents.
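The difference in access model can be sketched with two in-memory toys. These classes (`ToyBlockDevice`, `ToyObjectStore`) are hypothetical illustrations, not any provider's API. The block device reads and writes fixed-size blocks by index. The object store saves whole objects under keys with metadata and lists them by key prefix.

```python
from typing import Dict, Optional


class ToyBlockDevice:
    """Fixed-size blocks addressed by index, like a volume attached to a VM."""

    def __init__(self, num_blocks: int, block_size: int = 4096):
        self.block_size = block_size
        self.blocks = [bytes(block_size) for _ in range(num_blocks)]

    def write_block(self, index: int, data: bytes) -> None:
        if len(data) > self.block_size:
            raise ValueError("data larger than one block")
        # Pad short writes with zero bytes so every block keeps its fixed size.
        self.blocks[index] = data.ljust(self.block_size, b"\x00")

    def read_block(self, index: int) -> bytes:
        return self.blocks[index]


class ToyObjectStore:
    """Whole objects stored under string keys in a flat namespace."""

    def __init__(self):
        self.objects = {}  # key -> (data, metadata)

    def put(self, key: str, data: bytes, metadata: Optional[Dict[str, str]] = None) -> None:
        self.objects[key] = (data, dict(metadata or {}))

    def get(self, key: str) -> bytes:
        return self.objects[key][0]

    def list_keys(self, prefix: str = ""):
        # "Folders" are just shared key prefixes, not real directories.
        return sorted(k for k in self.objects if k.startswith(prefix))


dev = ToyBlockDevice(num_blocks=4, block_size=8)
dev.write_block(2, b"abc")
store = ToyObjectStore()
store.put("images/cat.png", b"\x89PNG", {"content-type": "image/png"})
store.put("docs/readme.txt", b"hello")
print(store.list_keys("images/"))  # ['images/cat.png']
```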

5. Networking Components

Networking components in cloud server architecture facilitate communication between various servers and services within the infrastructure. These components include routers, switches, firewalls, and virtual private networks (VPNs). Routers enable the transfer of data packets between different networks, while switches connect devices within a network. Firewalls enforce security policies by monitoring and controlling incoming and outgoing network traffic. VPNs provide secure remote access to the cloud infrastructure, allowing users to connect securely from any location.
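A firewall policy is essentially an ordered list of rules evaluated against each connection. The sketch below assumes first-match-wins semantics with a default deny, which is one common model. Providers differ on this point; some security-group models, for example, are allow-only. The `Rule` type and the address ranges are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str   # "allow" or "deny"
    source: str   # CIDR block the connection must come from
    port: int     # destination port


def evaluate(rules, src_ip: str, port: int) -> str:
    """Return the action of the first matching rule; unmatched traffic is denied."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if port == rule.port and addr in ipaddress.ip_network(rule.source):
            return rule.action
    return "deny"


rules = [
    Rule("deny", "203.0.113.0/24", 443),  # block a known-bad range first
    Rule("allow", "0.0.0.0/0", 443),      # HTTPS from anywhere else
    Rule("allow", "10.0.0.0/16", 22),     # SSH only from the internal network
]
print(evaluate(rules, "198.51.100.7", 443))  # allow
print(evaluate(rules, "198.51.100.7", 22))   # deny
```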

Designing a Secure Cloud Server Architecture

Security is a critical aspect of cloud server architecture. When designing a secure architecture, several considerations must be taken into account:

1. Identity and Access Management (IAM)

Implementing a robust IAM system is essential for controlling access to the cloud infrastructure. This involves defining roles, permissions, and access policies for different users and services. By enforcing the principle of least privilege, businesses can ensure that only authorized individuals or systems can access sensitive resources, reducing the risk of data breaches.
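Least privilege comes down to a deny-by-default check: an action is permitted only if some role assigned to the caller explicitly grants it. The roles, users, and `service:action` names below are hypothetical. Real IAM systems also add conditions, resource scopes, and explicit denies, which this sketch omits.

```python
from fnmatch import fnmatchcase

# Hypothetical roles, each granting a small set of "service:action" permissions.
ROLES = {
    "reader": {"storage:GetObject", "storage:ListObjects"},
    "deployer": {"compute:StartInstance", "compute:StopInstance", "storage:GetObject"},
    "admin": {"*"},  # wildcard: everything (grant sparingly)
}

# Hypothetical role assignments.
USERS = {
    "alice": {"reader"},
    "ci-pipeline": {"deployer"},
    "ops-lead": {"admin"},
}


def is_allowed(user: str, action: str) -> bool:
    """Allow only if one of the user's roles grants the action; otherwise deny."""
    for role in USERS.get(user, set()):
        if any(fnmatchcase(action, pattern) for pattern in ROLES.get(role, set())):
            return True
    return False


print(is_allowed("alice", "storage:GetObject"))     # True
print(is_allowed("alice", "compute:StopInstance"))  # False
```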

2. Encryption

Encrypting data at rest and in transit is vital to protect sensitive information from unauthorized access. Data encryption ensures that even if data is intercepted or compromised, it remains unreadable without the appropriate decryption keys. Utilizing strong encryption algorithms and regularly rotating encryption keys enhances the security of the cloud infrastructure.

3. Network Security

Implementing robust network security measures is crucial to protect the cloud infrastructure from external threats. This includes deploying firewalls, intrusion detection and prevention systems (IDPS), and network segmentation. Firewalls monitor and control incoming and outgoing network traffic, while IDPSs detect and respond to potential attacks. Network segmentation separates different parts of the infrastructure, limiting the impact of a security breach.
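Segmentation can be expressed as a small table of which tiers may talk to which. This sketch assumes a hypothetical three-tier layout with one subnet per tier and uses Python's standard `ipaddress` module. The subnets and allowed flows are illustrative only.

```python
import ipaddress
from typing import Optional

# Hypothetical three-tier layout: one subnet per tier.
SEGMENTS = {
    "web": ipaddress.ip_network("10.0.1.0/24"),
    "app": ipaddress.ip_network("10.0.2.0/24"),
    "db": ipaddress.ip_network("10.0.3.0/24"),
}

# Permitted tier-to-tier flows; anything not listed is blocked.
ALLOWED_FLOWS = {("web", "app"), ("app", "db")}


def segment_of(ip: str) -> Optional[str]:
    addr = ipaddress.ip_address(ip)
    for name, network in SEGMENTS.items():
        if addr in network:
            return name
    return None


def flow_allowed(src_ip: str, dst_ip: str) -> bool:
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False  # addresses outside every known segment are denied
    # Traffic inside a tier is allowed; across tiers only listed flows are.
    return src == dst or (src, dst) in ALLOWED_FLOWS


print(flow_allowed("10.0.1.10", "10.0.2.20"))  # True: web -> app
print(flow_allowed("10.0.1.10", "10.0.3.30"))  # False: web cannot reach db directly
```

If the web tier is compromised, the attacker still cannot open connections straight to the database subnet, which is the "limiting the impact" effect described above.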

4. Data Protection Measures

In addition to encryption, implementing data protection measures such as regular backups, disaster recovery plans, and data replication enhances the resiliency of the cloud infrastructure. Backups ensure that data can be restored in case of accidental deletion or hardware failures. Disaster recovery plans outline the steps to be taken in the event of a catastrophic incident, enabling businesses to recover quickly and minimize downtime.
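Regular backups need a retention policy so that storage does not grow without bound. The sketch below implements a simple grandfather-father-son scheme that keeps recent daily copies, older weekly copies, and monthly copies. The thresholds and the choice of Sunday and the first of the month are assumptions, not a standard.

```python
from datetime import date, timedelta


def backups_to_keep(backups, today, daily=7, weekly=4, monthly=12):
    """Grandfather-father-son retention over a list of backup dates."""
    keep = set()
    for b in backups:
        age = (today - b).days
        if age < daily:
            keep.add(b)  # every backup from the last `daily` days
        elif b.weekday() == 6 and age < weekly * 7:
            keep.add(b)  # Sunday backups for `weekly` weeks
        elif b.day == 1 and age < monthly * 31:
            keep.add(b)  # first-of-month backups for roughly `monthly` months
    return keep


today = date(2024, 3, 31)
backups = [today - timedelta(days=i) for i in range(60)]  # one backup per day
kept = backups_to_keep(backups, today)
print(len(kept), "of", len(backups), "backups kept")  # 12 of 60 backups kept
```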

Scaling and Elasticity in Cloud Server Architecture

One of the key advantages of cloud server architecture is its ability to scale resources horizontally and vertically, providing businesses with the flexibility to handle fluctuating workloads. Let’s explore the scaling techniques:

1. Horizontal Scaling

Horizontal scaling, also known as scaling out, involves adding more instances of servers to distribute the workload. This can be achieved by adding more virtual machines or containers as demand increases. Horizontal scaling allows businesses to handle increased traffic and ensures that resources are evenly distributed, preventing bottlenecks.

2. Vertical Scaling

Vertical scaling, also known as scaling up, involves increasing the capacity of individual servers by adding more resources, such as CPU, RAM, or storage. This is suitable for workloads that require more processing power or memory. Vertical scaling enables businesses to handle resource-intensive applications without the need for additional servers.

3. Auto-Scaling Groups

Auto-scaling groups automate the process of scaling resources based on predefined conditions, such as CPU utilization or network traffic. These groups monitor the workload and automatically add or remove instances as needed. Auto-scaling ensures that the cloud infrastructure can adapt to changing demand, providing optimal performance and cost-efficiency.
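The scaling decision itself is often a proportional rule. The sketch below follows the shape of the formula Kubernetes documents for its Horizontal Pod Autoscaler, `desired = ceil(current * current_metric / target_metric)`, with a tolerance band and min/max limits. The parameter names and defaults are assumptions, and managed auto-scaling groups may use different policies.

```python
import math


def desired_instances(current: int, current_cpu: float, target_cpu: float,
                      min_size: int, max_size: int, tolerance: float = 0.1) -> int:
    """Proportional scaling rule clamped to the group's [min_size, max_size]."""
    ratio = current_cpu / target_cpu
    # Ignore small deviations so the group does not flap around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    return max(min_size, min(max_size, desired))


print(desired_instances(4, current_cpu=90, target_cpu=60, min_size=2, max_size=10))  # 6
print(desired_instances(4, current_cpu=63, target_cpu=60, min_size=2, max_size=10))  # 4 (within tolerance)
print(desired_instances(4, current_cpu=15, target_cpu=60, min_size=2, max_size=10))  # 2 (clamped to min)
```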

4. Load Balancing

Load balancers play a crucial role in scaling and distributing traffic across multiple servers. By evenly distributing incoming requests, load balancers prevent any single server from becoming overwhelmed. This ensures that the workload is distributed efficiently and allows businesses to handle increased traffic without affecting performance.

5. Elastic IP Addresses

Elastic IP addresses (the AWS term; other providers offer similar reserved static IPs) give businesses a static public IP address that can be remapped from one instance to another. If an instance fails or is replaced, the address can be associated with the new instance, so clients keep using the same IP without DNS updates or application reconfiguration. This is most useful for individual instances that are replaced frequently. For fleets that scale out and in, a load balancer is usually the better entry point.

High Availability and Fault Tolerance in Cloud Server Architecture

Ensuring high availability and fault tolerance is crucial for any mission-critical application. Let’s explore the strategies and technologies used in cloud server architecture:

1. Data Replication

Data replication involves creating copies of data across multiple servers or geographic locations. By replicating data, businesses can ensure that even if one server or location fails, data remains accessible from other replicas. This redundancy reduces the risk of data loss and enables continuous availability of services.
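One common way to reason about replicated data is quorum replication. With N replicas, a write succeeds once W replicas acknowledge it, and a read consults R replicas. Choosing R + W > N guarantees that every read quorum includes at least one replica holding the latest successful write. The `Replica` class and helper functions below are a hypothetical in-memory sketch of that idea, not a production protocol.

```python
class Replica:
    """One copy of the data; `up` simulates whether the node is reachable."""

    def __init__(self, name: str):
        self.name = name
        self.up = True
        self.data = {}  # key -> (version, value)

    def write(self, key, value, version) -> bool:
        if not self.up:
            return False
        self.data[key] = (version, value)
        return True

    def read(self, key):
        # None means "unreachable"; a reachable node lacking the key reports version 0.
        if not self.up:
            return None
        return self.data.get(key, (0, None))


def quorum_write(replicas, key, value, version, w: int) -> bool:
    """Send the write to every replica; succeed if at least `w` acknowledge.
    This sketch does not roll back partial writes when the quorum is missed."""
    acks = sum(replica.write(key, value, version) for replica in replicas)
    return acks >= w


def quorum_read(replicas, key, r: int):
    """Collect answers from `r` reachable replicas and return the newest value."""
    responses = []
    for replica in replicas:
        answer = replica.read(key)
        if answer is not None:
            responses.append(answer)
        if len(responses) == r:
            break
    if len(responses) < r:
        raise RuntimeError("read quorum not reached")
    return max(responses, key=lambda pair: pair[0])[1]


# N = 3 replicas with W = 2 and R = 2, so R + W > N.
a, b, c = Replica("a"), Replica("b"), Replica("c")
nodes = [a, b, c]
quorum_write(nodes, "cart:42", "v1", version=1, w=2)
c.up = False                    # c misses the next write
quorum_write(nodes, "cart:42", "v2", version=2, w=2)
c.up, a.up = True, False        # now a is down and c is stale
print(quorum_read(nodes, "cart:42", r=2))  # v2
```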

2. Fault-Tolerant Networking

Fault-tolerant networking involves implementing redundant network connections and devices to eliminate single points of failure. Redundant network switches, routers, and network interface cards (NICs) ensure that if one component fails, traffic can be automatically rerouted through alternate paths. This redundancy enhances the reliability and availability of the cloud infrastructure.

3. Multiple Availability Zones

Availability zones (AZs) are physically separate locations within a region, each made up of one or more data centers with their own power and networking. Each zone is designed to be isolated from failures in the others, providing redundancy and fault tolerance. By deploying resources across multiple availability zones, businesses can keep services running when one zone experiences an outage, because traffic fails over to the remaining zones. This minimizes downtime and maintains continuous availability.
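The benefit of spreading instances can be checked with simple arithmetic. With instances distributed evenly over three zones, losing one zone removes about a third of capacity. The sketch below uses hypothetical zone names and a round-robin placement.

```python
def spread_across_zones(instance_count: int, zones) -> dict:
    """Round-robin placement, so no zone holds more than one extra instance."""
    counts = {zone: 0 for zone in zones}
    for i in range(instance_count):
        counts[zones[i % len(zones)]] += 1
    return counts


def capacity_after_zone_failure(placement: dict, failed_zone: str) -> int:
    """Instances still serving when one zone goes down."""
    return sum(n for zone, n in placement.items() if zone != failed_zone)


placement = spread_across_zones(6, ["zone-a", "zone-b", "zone-c"])
print(placement)                                          # {'zone-a': 2, 'zone-b': 2, 'zone-c': 2}
print(capacity_after_zone_failure(placement, "zone-b"))   # 4
```

To keep four instances serving through the loss of any one of three zones, the group therefore needs six in total, two per zone.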

4. Load Balancers

Load balancers play a crucial role in achieving high availability and fault tolerance. By distributing incoming traffic across multiple servers or instances, load balancers ensure that even if one server fails, the workload can be automatically shifted to other healthy servers. Load balancers continuously monitor the health of servers and route traffic only to those that are functioning properly, minimizing the impact of failures and maximizing uptime.
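Health checks usually require several consecutive results before changing a server's state, so that a single slow probe does not pull it out of rotation. The sketch below models that with two thresholds. Many managed load balancers expose similar settings, but the `HealthChecker` class and its default values are hypothetical.

```python
class HealthChecker:
    """Marks a target unhealthy after `unhealthy_threshold` consecutive failed
    probes, and healthy again after `healthy_threshold` consecutive successes."""

    def __init__(self, healthy_threshold: int = 3, unhealthy_threshold: int = 2):
        self.healthy_threshold = healthy_threshold
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy = True
        self._streak = 0  # consecutive probe results that disagree with the current state

    def record(self, probe_ok: bool) -> bool:
        if probe_ok == self.healthy:
            self._streak = 0  # result confirms the current state
        else:
            self._streak += 1
            needed = self.healthy_threshold if probe_ok else self.unhealthy_threshold
            if self._streak >= needed:
                self.healthy = probe_ok
                self._streak = 0
        return self.healthy


hc = HealthChecker(healthy_threshold=3, unhealthy_threshold=2)
probes = [False, False, True, True, True]
print([hc.record(ok) for ok in probes])  # [True, False, False, False, True]
```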

5. Automated Monitoring and Remediation

Automated monitoring tools are essential for detecting and resolving issues in real time. By continuously monitoring the health and performance of servers, applications, and networking components, businesses can proactively identify and address potential problems. Automated remediation systems can be set up to respond to detected issues automatically, such as restarting failed instances or scaling resources to meet increased demand. This automation reduces the need for manual intervention and helps keep the cloud infrastructure highly available and fault-tolerant.

Monitoring and Performance Optimization in Cloud Server Architecture

Monitoring and optimizing the performance of your cloud infrastructure is essential for delivering a seamless user experience. Let’s explore some key aspects:

1. Resource Monitoring

Effective resource monitoring involves tracking the utilization and performance of various resources, including CPU, memory, disk storage, and network bandwidth. By monitoring resource usage, businesses can identify bottlenecks and optimize resource allocation to ensure optimal performance. Utilizing monitoring tools that provide real-time insights and alerts allows for proactive management of resources.
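Alerting on a single sample tends to be noisy, so monitoring tools commonly evaluate a metric over a window. This hypothetical `WindowedAlert` class fires when the average of the most recent samples exceeds a threshold. The 80% CPU threshold and three-sample window in the example are assumptions.

```python
from collections import deque


class WindowedAlert:
    """Fires when the average of the last `window` samples exceeds `threshold`."""

    def __init__(self, threshold: float, window: int):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically

    def observe(self, value: float) -> bool:
        self.samples.append(value)
        # Wait for a full window so a single spike at startup does not alert.
        if len(self.samples) < self.samples.maxlen:
            return False
        return sum(self.samples) / len(self.samples) > self.threshold


cpu_alert = WindowedAlert(threshold=80.0, window=3)
readings = [95, 70, 60, 90, 95, 92]  # percent CPU, one sample per minute
print([cpu_alert.observe(v) for v in readings])
# [False, False, False, False, True, True]
```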

2. Application Performance Monitoring (APM)

APM tools track and analyze the performance of applications running in a cloud environment. They provide insights into response times, transaction volumes, and other metrics that affect the user experience. By monitoring application performance, businesses can identify areas for improvement, optimize code, and enhance the overall user experience.
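Latency is usually reported as percentiles rather than an average, because a few slow requests can distort the mean. The sketch below uses the nearest-rank percentile definition on made-up latency samples.

```python
import math


def percentile(samples, p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


latencies_ms = [12, 15, 11, 14, 13, 250, 16, 12, 14, 13]  # hypothetical measurements
print("mean", sum(latencies_ms) / len(latencies_ms))      # mean 37.0
print("p50", percentile(latencies_ms, 50))                # p50 13
print("p90", percentile(latencies_ms, 90))                # p90 16
print("p99", percentile(latencies_ms, 99))                # p99 250
```

Here the mean is 37 ms even though most requests finish in about 13 ms. The p99 value exposes the 250 ms outlier instead of blending it in.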

3. Load Testing and Performance Optimization

Load testing involves simulating high levels of user traffic to evaluate how the system performs under peak loads. By conducting load testing, businesses can identify performance bottlenecks and optimize the infrastructure and applications accordingly. Performance optimization techniques may include caching, database tuning, code optimization, and load balancing to ensure the system can handle increased traffic without degradation.

4. Proactive Scaling

Proactive scaling means analyzing the system's performance metrics and adding capacity before the system reaches its limits. By studying historical data and predicting future demand patterns, businesses can scale resources ahead of peak periods. This helps absorb increased traffic smoothly and prevents performance issues or downtime caused by resource constraints.
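A minimal form of predictive scaling is a seasonal forecast: assume the coming hour will look like the same hour on previous days, then provision for that level plus headroom. The history, per-instance capacity, and 20% headroom below are hypothetical. Real predictive scaling services use more sophisticated models.

```python
import math
from statistics import mean


def forecast_same_hour(history: dict, hour: int) -> float:
    """Average requests/sec observed at this hour on previous days."""
    return mean(history[hour])


def instances_to_provision(forecast_rps: float, rps_per_instance: float,
                           headroom: float = 0.2, min_size: int = 1) -> int:
    """Capacity for the forecast plus a safety margin, never below min_size."""
    return max(min_size, math.ceil(forecast_rps * (1 + headroom) / rps_per_instance))


history = {9: [1800.0, 2100.0, 2400.0]}  # req/s at 09:00 on the last three days
forecast = forecast_same_hour(history, hour=9)
print(forecast, instances_to_provision(forecast, rps_per_instance=500.0))  # 2100.0 6
```

Running this shortly before 09:00 lets the new instances finish booting before the traffic arrives, which is the point of scaling proactively rather than reactively.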

Cost Optimization in Cloud Server Architecture

While cloud server architecture offers scalability and flexibility, it is crucial to optimize costs to ensure efficient resource utilization. Let’s explore some cost optimization strategies:

1. Reserved Instances

Reserved instances allow businesses to commit to using specific instances for a designated period, typically one to three years. By committing to longer-term usage, businesses can benefit from significantly reduced hourly rates compared to on-demand instances. Reserved instances are suitable for workloads with predictable and steady resource requirements.
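Whether a reservation pays off depends on utilization. On-demand cost grows with the hours actually used, while a reservation is paid for every hour of the term. Setting the two yearly costs equal gives a break-even utilization of `reserved_rate / on_demand_rate`. The prices below are hypothetical.

```python
HOURS_PER_YEAR = 8760


def on_demand_cost(rate_per_hour: float, utilization: float) -> float:
    """On-demand: pay only for the hours the instance actually runs."""
    return rate_per_hour * HOURS_PER_YEAR * utilization


def reserved_cost(effective_rate_per_hour: float) -> float:
    """Reserved: the commitment is paid for every hour of the term, used or not."""
    return effective_rate_per_hour * HOURS_PER_YEAR


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Utilization above which the reservation is cheaper than on-demand."""
    return reserved_rate / on_demand_rate


# Hypothetical prices: $0.10/h on-demand vs. an effective $0.06/h reserved.
print(round(break_even_utilization(0.10, 0.06), 2))  # 0.6
```

In this example a reservation only saves money if the instance runs more than 60% of the time, which is why the text recommends reservations for steady, predictable workloads.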

2. Spot Instances

Spot instances run on a provider's spare computing capacity at significantly lower prices than on-demand instances, and their prices fluctuate with supply and demand. The trade-off is that the provider can reclaim them at short notice, for example when it needs the capacity back or, under bid-based pricing, when the market price rises above your maximum price. Businesses therefore use spot instances for non-critical or fault-tolerant workloads. Spot instances offer potential cost savings but require careful management and the ability to handle interruptions.
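Workloads that run on spot capacity are typically written to checkpoint their progress, so that an interruption only loses the work done since the last checkpoint. In this sketch, a plain dictionary stands in for durable storage and the `SpotInterruption` exception simulates the provider reclaiming the instance. Both are assumptions for illustration.

```python
class SpotInterruption(Exception):
    """Simulated reclaim of the instance by the provider."""


def process_items(items, checkpoint: dict, interrupt_after=None):
    """Resume from the saved position and record progress after each item.

    `checkpoint` stands in for durable storage such as an object store;
    `interrupt_after` simulates a reclaim after that many items in this run.
    """
    results = checkpoint.setdefault("results", [])
    done_this_run = 0
    for i in range(checkpoint.get("next_index", 0), len(items)):
        if interrupt_after is not None and done_this_run == interrupt_after:
            raise SpotInterruption(f"reclaimed before item {i}")
        results.append(items[i] * items[i])  # the "work": square each item
        checkpoint["next_index"] = i + 1     # save progress before moving on
        done_this_run += 1
    return results


state = {}                     # stands in for durable storage
data = [1, 2, 3, 4, 5]
try:
    process_items(data, state, interrupt_after=2)
except SpotInterruption as exc:
    print("interrupted:", exc)  # progress so far is already in `state`
print(process_items(data, state))  # [1, 4, 9, 16, 25]
```

The second run starts at item 2 rather than from scratch, so only the items in flight at the moment of interruption would ever need to be redone.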

3. Rightsizing

Rightsizing involves matching the resources allocated to instances with their actual requirements. By analyzing resource utilization metrics, businesses can identify instances that are overprovisioned or underutilized. Downsizing or upsizing instances to align with workload demands can result in significant cost savings by optimizing resource allocation and minimizing waste.
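Rightsizing can be framed as choosing the cheapest instance type whose capacity covers observed peak usage plus a safety margin. The sketch below uses the 95th percentile of usage and 25% headroom. Both choices and the instance catalog are hypothetical.

```python
import math

# Hypothetical catalog: (name, vCPUs, memory in GiB, price per hour in USD).
CATALOG = [
    ("small", 2, 4, 0.04),
    ("medium", 4, 8, 0.08),
    ("large", 8, 16, 0.16),
    ("xlarge", 16, 32, 0.32),
]


def p95(samples):
    """Nearest-rank 95th percentile."""
    ordered = sorted(samples)
    return ordered[math.ceil(95 * len(ordered) / 100) - 1]


def rightsize(cpu_samples, mem_samples, headroom=0.25):
    """Cheapest type whose capacity covers p95 usage plus a safety headroom."""
    need_cpu = p95(cpu_samples) * (1 + headroom)
    need_mem = p95(mem_samples) * (1 + headroom)
    candidates = [t for t in CATALOG if t[1] >= need_cpu and t[2] >= need_mem]
    if not candidates:
        raise ValueError("workload exceeds the largest instance type")
    return min(candidates, key=lambda t: t[3])[0]


# A workload running on "xlarge" whose observed usage is far lower.
cpu_used = [2.0, 2.2, 2.5, 2.4, 2.1, 2.3, 2.6, 2.2, 2.0, 2.4]  # vCPUs in use
mem_used = [5.0] * 10                                          # GiB in use
print(rightsize(cpu_used, mem_used))  # medium
```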

4. Cost Monitoring and Analysis

Continuous monitoring and analysis of cost metrics are crucial for effective cost optimization. Utilizing cost management tools provided by cloud service providers, businesses can track their spending, identify cost drivers, and gain insights into resource usage patterns. By identifying areas of high cost or inefficient resource allocation, businesses can take appropriate actions to optimize costs without compromising performance and reliability.
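Much of cost analysis consists of grouping spend by a dimension, such as service or owning team, and sorting by total. The line items and tag names below are hypothetical. In practice they would come from the provider's billing export.

```python
from collections import defaultdict

# Hypothetical billing line items.
line_items = [
    {"service": "compute", "team": "checkout", "cost": 1200.0},
    {"service": "storage", "team": "checkout", "cost": 300.0},
    {"service": "compute", "team": "search", "cost": 800.0},
    {"service": "network", "team": "search", "cost": 150.0},
    {"service": "compute", "team": None, "cost": 400.0},  # untagged resource
]


def cost_by(items, dimension):
    """Total cost per value of `dimension`, largest cost drivers first."""
    totals = defaultdict(float)
    for item in items:
        totals[item[dimension] or "untagged"] += item["cost"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


print(cost_by(line_items, "service"))  # {'compute': 2400.0, 'storage': 300.0, 'network': 150.0}
print(cost_by(line_items, "team"))     # {'checkout': 1500.0, 'search': 950.0, 'untagged': 400.0}
```

Untagged spend shows up as its own bucket, which is often the first thing worth fixing.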

Case Studies: Real-World Cloud Server Architecture Implementations

Real-world case studies provide valuable insights into how businesses have successfully implemented cloud server architectures. Let’s explore a few examples:

1. Case Study: E-commerce Scalability

A leading e-commerce platform faced challenges with handling peak traffic during seasonal sales. By implementing a cloud server architecture, they were able to seamlessly scale their infrastructure horizontally to accommodate increased demand. Load balancers distributed traffic across multiple instances, ensuring high availability and performance. The company achieved significant cost savings by utilizing auto-scaling groups to automatically add or remove instances based on traffic fluctuations.

2. Case Study: Data Analytics Platform

A data analytics company leveraged cloud server architecture to build a scalable and cost-effective platform. They utilized virtual machines to process large volumes of data, while load balancers ensured even distribution of processing tasks. By implementing a fault-tolerant networking setup across multiple availability zones, they ensured uninterrupted data processing and high availability. The company optimized costs by leveraging spot instances for non-critical workloads and rightsizing instances based on resource utilization.

3. Case Study: Software-as-a-Service (SaaS) Provider

A SaaS provider adopted a cloud server architecture to deliver their application to customers globally. They leveraged multiple availability zones to ensure high availability and fault tolerance. By implementing proactive scaling based on monitoring metrics, they were able to handle increased user demand without performance degradation. The company optimized costs by utilizing reserved instances for predictable workloads and regularly analyzing cost metrics to identify areas for optimization.

Future Trends and Innovations in Cloud Server Architecture

Cloud server architecture is continuously evolving, driven by technological advancements and changing business needs. Let’s explore some future trends and innovations:

1. Serverless Computing

Serverless computing, most commonly offered as Function-as-a-Service (FaaS), allows businesses to focus on writing and deploying code without managing servers or infrastructure. Developers deploy functions that execute in response to events and pay only for the actual execution time. This model lets businesses achieve even greater scalability and cost efficiency in their cloud server architectures.
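A FaaS function is just a handler that the platform invokes once per event. The sketch below follows the `(event, context)` handler convention of AWS Lambda's Python runtime. The order-shaped event and the `statusCode`/`body` response (a shape used with HTTP gateway integrations) are assumptions for illustration. Here the function is simply called locally.

```python
import json


def handler(event, context):
    """Event-driven function; the platform would call this once per event.

    The event shape (an "order" with "items") is hypothetical; real events
    depend on the trigger (HTTP gateway, queue, storage notification, ...).
    """
    order = event["order"]
    total = sum(item["price"] * item["qty"] for item in order["items"])
    return {
        "statusCode": 200,
        "body": json.dumps({"order_id": order["id"], "total": total}),
    }


# Local invocation: no server to manage or keep running between events.
sample_event = {"order": {"id": "A-17", "items": [{"price": 2.5, "qty": 4}, {"price": 10.0, "qty": 1}]}}
response = handler(sample_event, context=None)
print(response)  # {'statusCode': 200, 'body': '{"order_id": "A-17", "total": 20.0}'}
```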

2. Containerization

Containerization, using tools such as Docker for packaging and Kubernetes for orchestration, allows for the efficient packaging and deployment of applications. Containers provide lightweight, isolated environments that can be easily moved between different cloud servers or environments. This flexibility and portability simplify the deployment and management of applications, making containerization a popular choice in cloud server architecture.

3. Edge Computing

Edge computing brings computing resources closer to the data source or end-users, reducing latency and improving performance. By processing data closer to where it is generated, businesses can achieve faster response times and reduce the impact of network latency. Edge computing is particularly relevant for applications that require real-time processing, such as IoT devices and streaming services.

4. Machine Learning and Artificial Intelligence

Machine learning and artificial intelligence (AI) are increasingly being integrated into cloud server architectures. These technologies enable businesses to leverage advanced analytics, automate processes, and gain insights from large volumes of data. By integrating machine learning and AI capabilities into cloud server architecture, businesses can enhance decision-making, improve efficiency, and create personalized user experiences.

In conclusion, understanding cloud server architecture diagrams is crucial for businesses aiming to leverage the power of cloud computing. By understanding the key components, best practices, and future trends, you will be well-equipped to design, deploy, and manage a secure, scalable, and cost-effective cloud infrastructure. Remember to keep your knowledge up to date as the field evolves, so you can stay ahead in the ever-changing world of cloud server architecture.

Whether you are an IT professional, a business owner, or simply curious about cloud technology, this comprehensive guide has provided you with the necessary information to embark on your cloud server architecture journey. Start exploring, experimenting, and harnessing the full potential of the cloud for your organization’s success.